| Uploader: | Jrcal |

| Date Added: | 18.01.2019 |

Set Up AWS CLI and Download Your S3 Files From the Command Line | Viget

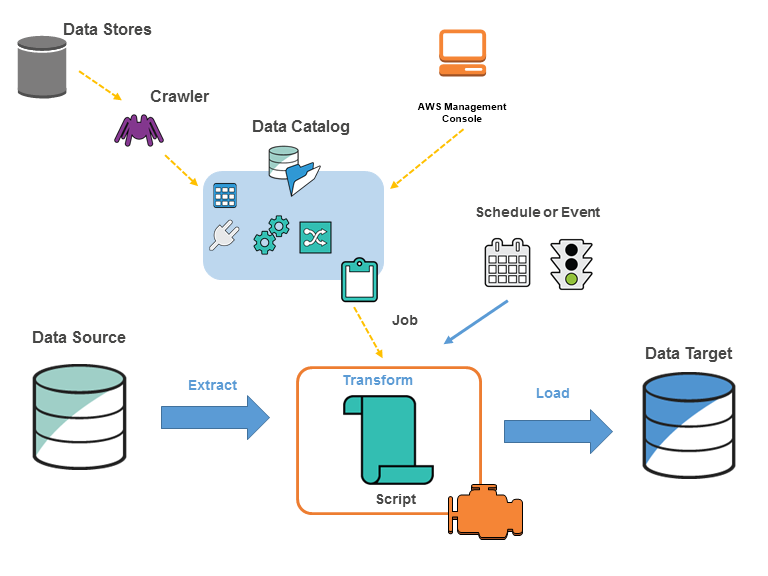

The other day I needed to download the contents of a large S3 folder. That is a tedious task in the browser: log into the AWS console, find the right bucket, find the right folder, open the first file, click download, maybe click download a few more times until something happens, go back, open the next file, over and over. (Henry Bley-Vroman)

To move a large amount of data as one file, tar everything into a single archive file. Create an S3 bucket in the same region as your EC2 instance and its EBS volume. Use the AWS CLI S3 commands to upload the archive to the S3 bucket, then use the AWS CLI to pull the file down to your local machine or to wherever your other storage is.

When you upload large files to Amazon S3, it's a best practice to use multipart uploads. If you're using the AWS Command Line Interface (AWS CLI), all high-level aws s3 commands automatically perform a multipart upload when the object is large.
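The archive-then-copy workflow above can be sketched in Python with the standard library's tarfile module plus argument lists for aws s3 cp that can be handed to subprocess.run. This is a minimal sketch, assuming the AWS CLI is installed and configured; the helper names, the bucket my-transfer-bucket, and the local paths are hypothetical.

```python
import tarfile
from pathlib import Path
from typing import List


def bundle_directory(src_dir: str, archive_path: str) -> List[str]:
    """Pack everything under src_dir into one gzip-compressed tar archive.

    Returns the member names so the caller can sanity-check the bundle
    before uploading it.
    """
    src = Path(src_dir)
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname stores paths relative to the folder name, not absolute paths
        tar.add(str(src), arcname=src.name)
    with tarfile.open(archive_path, "r:gz") as tar:
        return tar.getnames()


def s3_cp_command(source: str, destination: str, recursive: bool = False) -> List[str]:
    """Build an argv list for `aws s3 cp` that can be passed to subprocess.run."""
    cmd = ["aws", "s3", "cp", source, destination]
    if recursive:
        # copy every object under the source prefix, e.g. a whole "folder"
        cmd.append("--recursive")
    return cmd


# Example (hypothetical bucket and paths; requires `import subprocess`):
# bundle_directory("exports", "exports.tar.gz")
# subprocess.run(s3_cp_command("exports.tar.gz", "s3://my-transfer-bucket/exports.tar.gz"), check=True)
# subprocess.run(s3_cp_command("s3://my-transfer-bucket/exports.tar.gz", "exports.tar.gz"), check=True)
# subprocess.run(s3_cp_command("s3://my-transfer-bucket/some-folder/", "some-folder", recursive=True), check=True)
```

Passing an argument list rather than a shell string avoids quoting problems with spaces in file names. The recursive form covers the "download a whole S3 folder" case without clicking through the console.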

How to download large files from AWS

How can I transfer a large amount of data from one S3 bucket to another? Depending on your use case, you can perform the data transfer between buckets using one of the following options. You can split the transfer into multiple mutually exclusive operations to improve the transfer time through multi-threading. For example, you can run several instances of the AWS CLI and use the --exclude and --include parameters on each one, so that each instance copies a different set of objects. These parameters filter operations by file name. Note: The --exclude and --include parameters are processed on the client side.

Because of this, the resources of your local machine might affect the performance of the operation. For example, to copy a large amount of data from one bucket to another where all the file names begin with a number, you can run the following commands on two instances of the AWS CLI.
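A minimal Python sketch of the two-instance split described above. It only builds argument lists for aws s3 cp using its --recursive, --exclude and --include options; the helper names and the bucket names s3://srcbucket/ and s3://destbucket/ are hypothetical, and the leading-digit groups mirror the example in the text.

```python
import subprocess
from typing import List, Sequence


def split_copy_commands(source: str, destination: str, groups: Sequence[str]) -> List[List[str]]:
    """Build one `aws s3 cp --recursive` argv per group of leading characters.

    Each group is a string of single leading characters, e.g. "01234".
    Each command first excludes every key, then re-includes only keys whose
    names start with a character in that group. The AWS CLI applies filters
    in order, with later filters taking precedence, so --exclude "*" comes first.
    """
    seen = set()
    for group in groups:
        overlap = seen.intersection(group)
        if overlap:
            # the same leading character in two groups would copy those keys twice
            raise ValueError(f"characters {sorted(overlap)} appear in more than one group")
        seen.update(group)

    commands = []
    for group in groups:
        cmd = ["aws", "s3", "cp", source, destination, "--recursive", "--exclude", "*"]
        for ch in group:
            cmd += ["--include", f"{ch}*"]
        commands.append(cmd)
    return commands


def run_concurrently(commands: List[List[str]]) -> List[int]:
    """Start one AWS CLI process per command and wait for all of them."""
    procs = [subprocess.Popen(cmd) for cmd in commands]
    return [p.wait() for p in procs]


# Example (hypothetical buckets):
# cmds = split_copy_commands("s3://srcbucket/", "s3://destbucket/", ["01234", "56789"])
# exit_codes = run_concurrently(cmds)
```

Because the filters run on the client, each extra process costs local CPU and memory, which is why the groups should stay few and mutually exclusive.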

First, on one instance, run a command that copies the files whose names begin with the numbers 0 through 4. Then, on the second instance, run a command that copies the files whose names begin with the numbers 5 through 9.

Consider building a custom application using an AWS SDK to perform the data transfer for a very large number of objects.

While the AWS CLI can perform the copy operation, a custom application might be more efficient at performing a transfer at the scale of hundreds of millions of objects.

After you set up cross-Region replication (CRR) or same-Region replication (SRR) on the source bucket, Amazon S3 automatically and asynchronously replicates new objects from the source bucket to the destination bucket.

You can choose to filter which objects are replicated using a prefix or tag. For more information on configuring replication and specifying a filter, see Replication Configuration Overview. After replication is configured, only new objects are replicated to the destination bucket.
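As a rough illustration of a prefix or tag filter, the Python sketch below builds a one-rule replication configuration as a dict and writes it to JSON. It assumes the Filter-based rule schema (Filter, Priority, DeleteMarkerReplication, Destination.Bucket as an ARN) and that versioning is already enabled on both buckets; the helper names, role ARN, bucket names, and rule ID are hypothetical, so check the result against the replication configuration reference before applying it.

```python
import json
from typing import Any, Dict, Optional, Tuple


def replication_config(role_arn: str, destination_bucket: str,
                       prefix: Optional[str] = None,
                       tag: Optional[Tuple[str, str]] = None,
                       rule_id: str = "replicate-filtered-objects") -> Dict[str, Any]:
    """Build a one-rule replication configuration filtered by prefix and/or tag."""
    if prefix is not None and tag is not None:
        # both conditions must match, so they are combined under "And"
        rule_filter: Dict[str, Any] = {
            "And": {"Prefix": prefix, "Tags": [{"Key": tag[0], "Value": tag[1]}]}
        }
    elif tag is not None:
        rule_filter = {"Tag": {"Key": tag[0], "Value": tag[1]}}
    else:
        # an empty prefix matches every object in the bucket
        rule_filter = {"Prefix": prefix or ""}
    return {
        "Role": role_arn,
        "Rules": [{
            "ID": rule_id,
            "Status": "Enabled",
            "Priority": 1,
            "Filter": rule_filter,
            # required alongside Filter in the current rule schema
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
        }],
    }


def write_config(config: Dict[str, Any], path: str) -> None:
    """Save the configuration as JSON for the AWS CLI to read."""
    with open(path, "w") as f:
        json.dump(config, f, indent=2)
```

The saved file could then be applied with aws s3api put-bucket-replication --bucket SOURCE-BUCKET --replication-configuration file://replication.json, where SOURCE-BUCKET is a placeholder.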

Existing objects are not replicated to the destination bucket. To replicate existing objects, you can run an aws s3 cp command after setting up replication on the source bucket. The command copies the objects in the source bucket back into the source bucket, which triggers replication to the destination bucket. Note: It's a best practice to test the cp command in a non-production environment.

Doing so allows you to configure the parameters for your exact use case.

You can use Amazon S3 batch operations to copy multiple objects with a single request. When you create a batch operation job, you specify which objects to perform the operation on using an Amazon S3 inventory report or a CSV file.
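If you go the CSV route, the manifest can be generated rather than written by hand. This Python sketch assumes one bucket,key row per object with no header row, and percent-encodes keys with urllib.parse.quote (which leaves "/" unescaped); the helper name and the bucket name are hypothetical, so confirm the encoding rules in the batch operations manifest documentation.

```python
import csv
import io
from typing import Iterable
from urllib.parse import quote


def batch_manifest_csv(bucket: str, keys: Iterable[str]) -> str:
    """Render a CSV manifest with one `bucket,key` row per object.

    Keys are percent-encoded (an assumption about the manifest format), so
    spaces become %20 and commas become %2C instead of breaking the CSV.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for key in keys:
        writer.writerow([bucket, quote(key)])
    return buf.getvalue()


# Example (hypothetical bucket): write the manifest, upload it to S3,
# then reference it when creating the batch operation job.
# with open("manifest.csv", "w") as f:
#     f.write(batch_manifest_csv("my-source-bucket", ["a.txt", "photos/my cat.jpg"]))
```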

After the batch operation job is complete, you get a notification, and you can choose to receive a completion report about the job.

What's the best way to transfer large amounts of data from one Amazon S3 bucket to another?

The multipart_chunksize setting lets you break down a larger file into smaller parts for quicker upload speeds. Note: A multipart upload requires that a single file be uploaded in no more than 10,000 distinct parts. You must be sure that the chunk size you set balances the part size and the number of parts.
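The balance between part size and part count is simple integer arithmetic. This sketch uses the S3 limits of 10,000 parts per upload and a 5 MiB minimum for every part except the last, and rounds the result up to whole MiB; the helper names are illustrative, not part of the AWS CLI.

```python
MIB = 1024 * 1024
MAX_PARTS = 10_000         # S3 limit on parts per multipart upload
MIN_PART_SIZE = 5 * MIB    # S3 minimum for every part except the last


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding float rounding on very large files."""
    return -(-a // b)


def parts_needed(file_size: int, chunk_size: int) -> int:
    """Number of parts a file of file_size bytes splits into at chunk_size bytes."""
    return max(1, ceil_div(file_size, chunk_size))


def smallest_valid_chunksize(file_size: int) -> int:
    """Smallest whole-MiB chunk size that keeps the upload within MAX_PARTS."""
    chunk = ceil_div(ceil_div(file_size, MAX_PARTS), MIB) * MIB
    return max(chunk, MIN_PART_SIZE)
```

For a 100 GiB file, 8 MiB chunks would need 12,800 parts, which is over the limit, while smallest_valid_chunksize returns 11 MiB, giving 9,310 parts.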

The max_concurrent_requests setting controls how many requests the AWS CLI sends at the same time. The default value is 10, and you can increase it to a higher value. Note: Running more threads consumes more resources on your machine. You must be sure that your machine has enough resources to support the maximum number of concurrent requests that you want.
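Both settings can be changed with aws configure set on the default profile's s3 section. The Python sketch below only builds those argument lists; the helper name and the example values (20 concurrent requests, 16 MB chunks) are hypothetical choices, not recommendations.

```python
from typing import List


def s3_transfer_config_commands(max_concurrent_requests: int,
                                multipart_chunksize_mb: int) -> List[List[str]]:
    """Build `aws configure set` argv lists for the default profile's S3 transfer settings."""
    if max_concurrent_requests < 1:
        raise ValueError("max_concurrent_requests must be at least 1")
    if multipart_chunksize_mb < 5:
        # S3 rejects parts (other than the last) smaller than 5 MiB
        raise ValueError("multipart_chunksize must be at least 5 MB")
    return [
        ["aws", "configure", "set", "default.s3.max_concurrent_requests",
         str(max_concurrent_requests)],
        ["aws", "configure", "set", "default.s3.multipart_chunksize",
         f"{multipart_chunksize_mb}MB"],
    ]


# Example (requires `import subprocess`):
# for cmd in s3_transfer_config_commands(20, 16):
#     subprocess.run(cmd, check=True)
```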

Presume you've got an S3 bucket called my-download-bucket, and a large file already in the bucket. In your AWS console, navigate to S3, then to your my-download-bucket. Right-click the file, and se…

I'm trying to download one large file of more than … GB. As I understand it, a large file is downloaded in parts, so to download two parts of the file, each part must be written at its own offset in the target file so that it does not overwrite data that has already been downloaded. That does not happen, either with Download or with MultipleFileDownload.
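The offset problem in the question can be illustrated with a stdlib-only Python sketch: split the object into byte ranges, fetch the ranges concurrently, and write each part at its own offset in a pre-sized file so parts never overwrite one another. The fetch callable is an assumption standing in for a ranged GET; with boto3 it might be lambda s, e: s3.get_object(Bucket=bucket, Key=key, Range=f"bytes={s}-{e}")["Body"].read(), with the total size taken from head_object's ContentLength (bucket, key, and s3 are hypothetical). This sketch does not use or reproduce the Download or MultipleFileDownload APIs mentioned in the question.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def byte_ranges(total_size: int, part_size: int) -> List[Tuple[int, int]]:
    """Split [0, total_size) into inclusive (start, end) pairs, as HTTP Range headers use."""
    return [(start, min(start + part_size, total_size) - 1)
            for start in range(0, total_size, part_size)]


def parallel_ranged_download(fetch: Callable[[int, int], bytes], total_size: int,
                             dest_path: str, part_size: int, workers: int = 4) -> None:
    """Download all ranges concurrently, writing each part at its own offset."""
    # Pre-size the file so every worker writes into its own region.
    with open(dest_path, "wb") as f:
        f.truncate(total_size)

    def download_part(rng: Tuple[int, int]) -> None:
        start, end = rng
        data = fetch(start, end)
        if len(data) != end - start + 1:
            raise ValueError(f"short read for bytes {start}-{end}")
        # A separate handle per part; seeking to `start` keeps parts from overlapping.
        with open(dest_path, "r+b") as f:
            f.seek(start)
            f.write(data)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() drains the iterator so any worker exception is re-raised here
        list(pool.map(download_part, byte_ranges(total_size, part_size)))
```

Because each part is written at its own offset, the order in which parts finish does not matter, and a failed part can be retried without touching the others.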